A Deep Dive Into Our DeepLens Basketball Referee

Basketball not only causes broken ankles, but it can also cause broken relationships. From arguments about ambiguous fouls to debates over two- or three-pointers, pick-up games can turn into long-term feuds. Enter our Basketball Virtual Referee.

The goal of the Basketball Virtual Referee is to make games easier for players by allowing them to focus more on playing the game itself—and less on tracking shots, memorizing scores, and detecting fouls (nothing beats video replay capabilities).

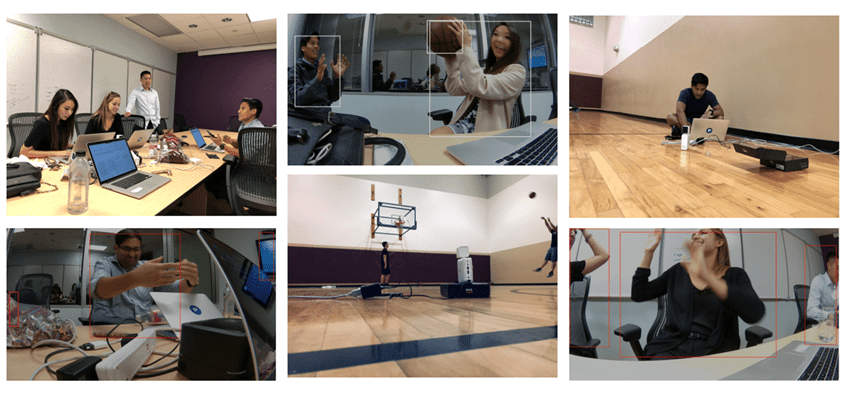

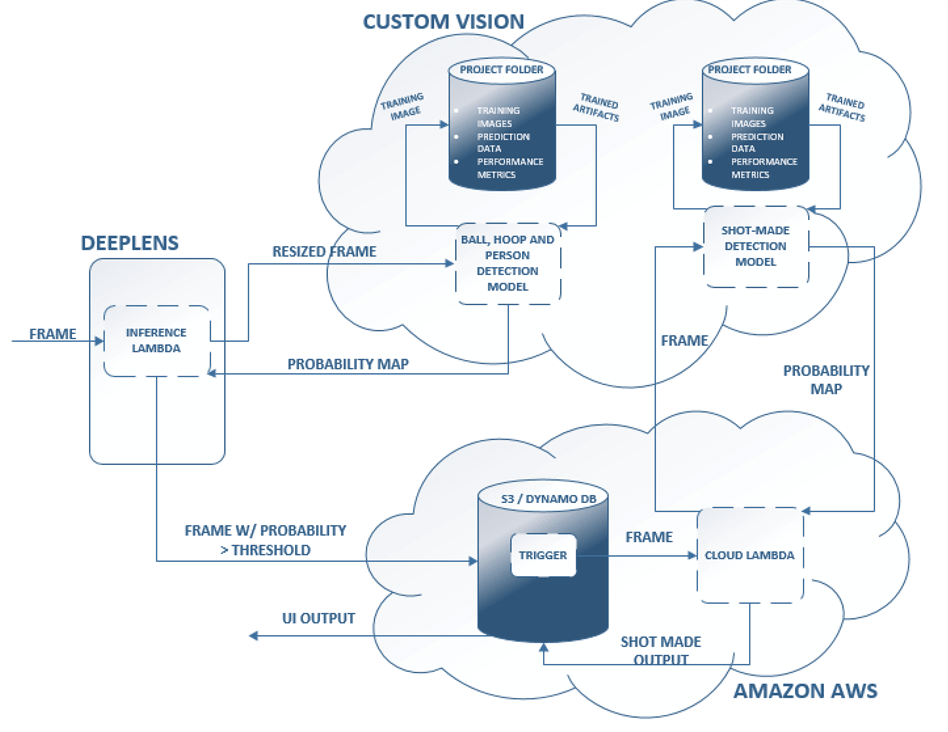

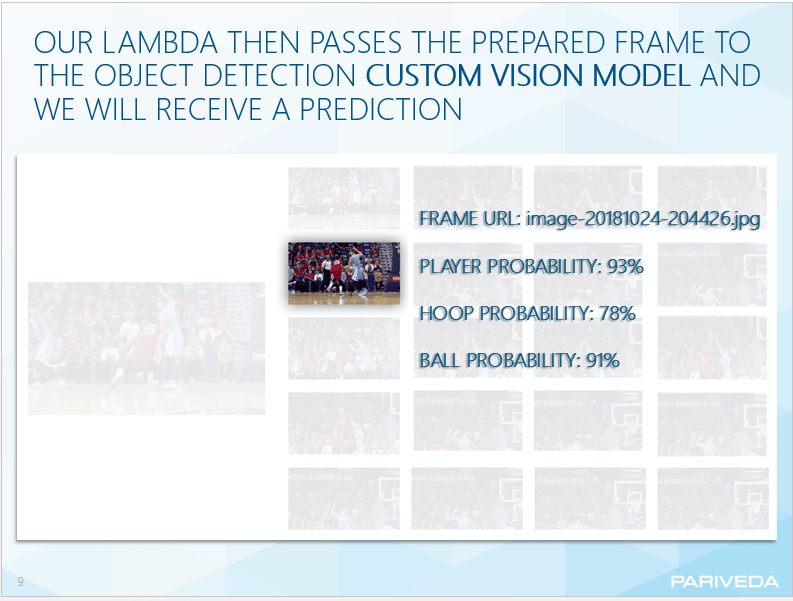

For our first iteration, the team focused on shot detection. We trained one Microsoft Custom Vision model to recognize a human, a basketball, and a basketball hoop, and a second model to detect the basketball entering the hoop. An application running in the AWS Cloud uses these models to identify DeepLens frames containing the desired objects, passes the images and their metadata through S3 and DynamoDB, and eventually reports to the UI when a shot was made.

What is DeepLens?

AWS DeepLens is a wireless-enabled video camera and development platform integrated with the AWS Cloud. It lets you use the latest Artificial Intelligence (AI) tools and technology to develop computer vision applications based on a deep learning model.

DeepLens is similar to the AI-powered Google Clips camera, but while Clips is targeted at consumers, DeepLens is a new, exciting tool for developers. According to Amazon’s website, it’s the first video camera designed to teach deep learning basics and optimized to run machine learning models on the camera.

What is Microsoft Custom Vision?

The Custom Vision service uses a machine learning algorithm to classify images. You, the developer, must submit groups of images that feature and lack the classification(s) in question. You specify the correct tags of the images at the time of submission. Then, the algorithm trains to this data and calculates its own accuracy by testing itself on that same data. Once the model is trained, you can test, retrain, and eventually use it to classify new images according to the needs of your app. You can also export the model itself for offline use.

How we created it

Below is what we used to create the Basketball Virtual Referee with AWS DeepLens:

- An AWS DeepLens device

- An AWS account

- A Microsoft Custom Vision account (free)

- A laptop or computer

- Hands or other means of interacting with the computer

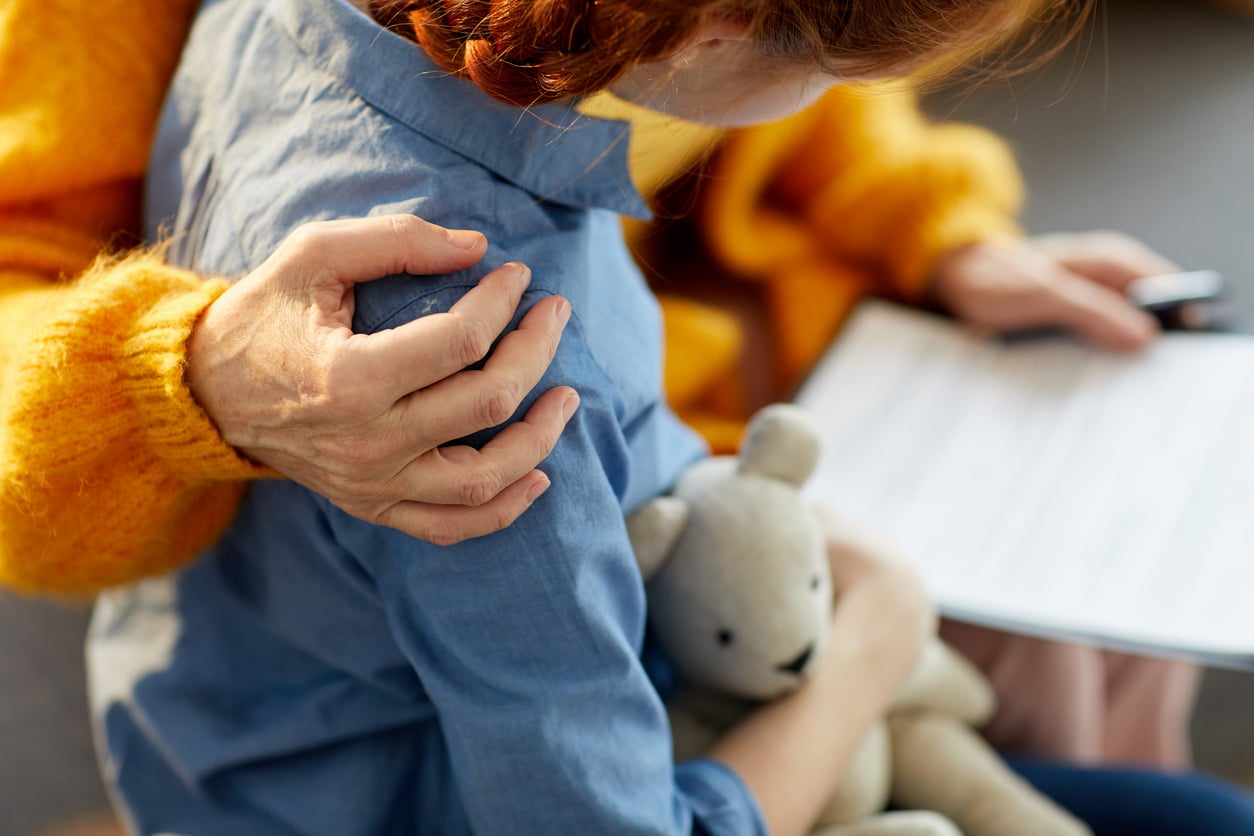

Behind the scenes with the project team: Mogi Patangan, Muhammad Naviwala, Sofia Thai, Brittany Harrington, and Alex Tai

Architecture

Technology stack

VIDEO DEMO

Watch the video to learn more about how Pariveda used AI to let players focus more on playing the game itself and less on tracking shots, memorizing scores, and detecting fouls.

Our solution

Training the object detection Custom Vision model

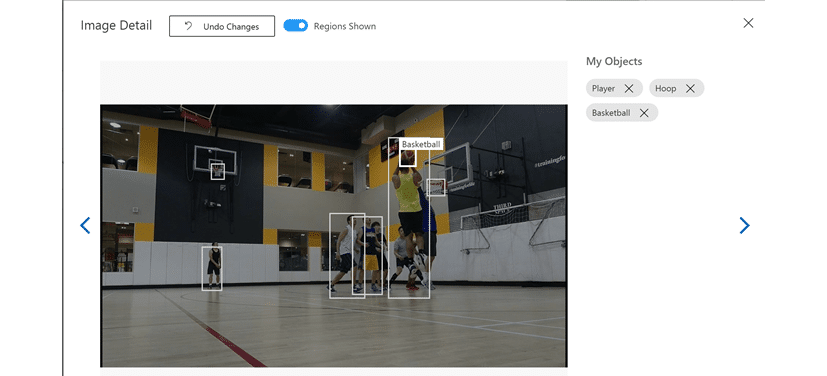

First, we gathered images of humans, basketballs, and basketball hoops. They could be separate images or all in the same photo.

Once we had our images, we created a free Microsoft Custom Vision account: https://www.customvision.ai/

There, we trained our object detection model to recognize our three objects (player/human, basketball, and basketball hoop).

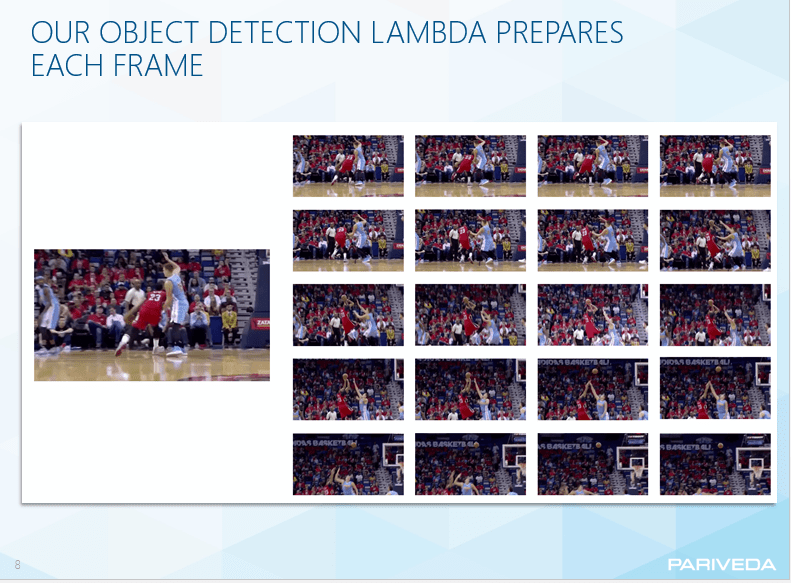

Once the model was trained with a high enough precision and recall, we needed a way to have our DeepLens camera feed pass into the Custom Vision model. A video feed is just a series of frames, so we needed to pass each frame into the model to detect the three objects.

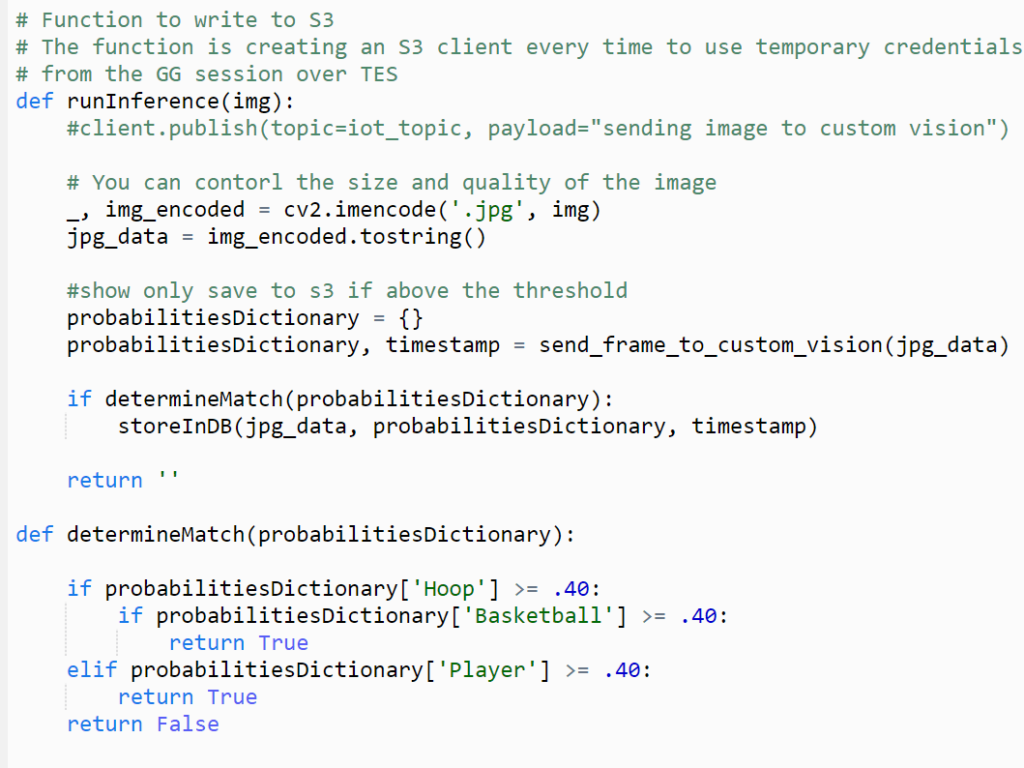

You can do this in many ways. We chose AWS Lambda because the DeepLens is part of the AWS suite. To learn more about creating a Lambda function, see the AWS Lambda documentation for a tutorial.

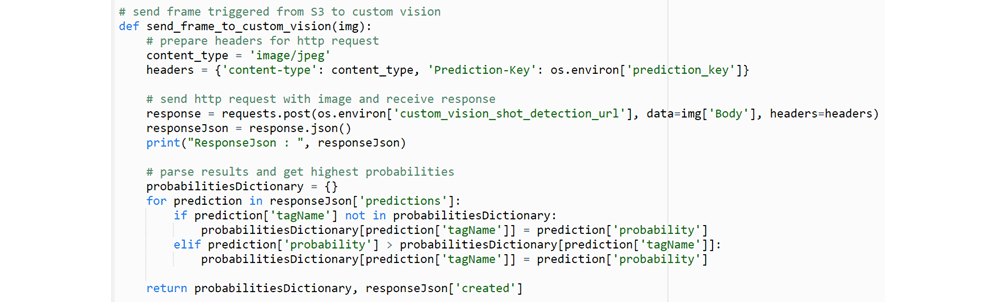

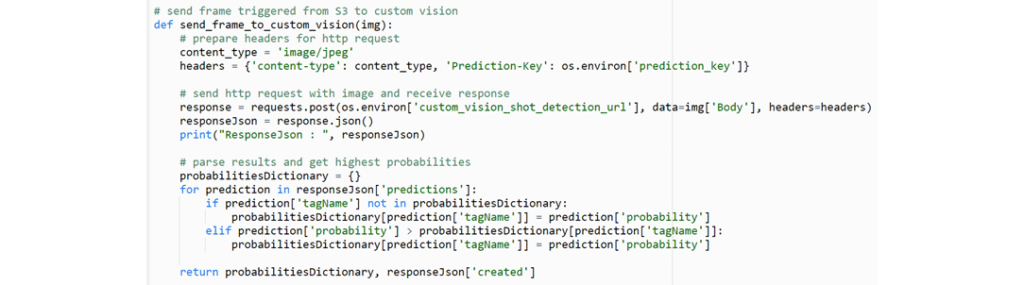

Below is a snippet of how we called the Custom Vision API and retrieved the probabilities.
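As a minimal sketch, and not the project's actual code, a call of this kind can be made with a single HTTP POST of the JPEG-encoded frame. The function names `call_custom_vision` and `max_probabilities` are hypothetical, and the prediction URL and key are assumed to be copied from your own Custom Vision project settings. The sketch also assumes the response carries a `predictions` list whose entries have `tagName` and `probability` fields.

```python
import json
import urllib.request


def call_custom_vision(frame_jpeg: bytes, prediction_url: str, prediction_key: str) -> dict:
    """POST one JPEG-encoded frame to a Custom Vision prediction URL.

    prediction_url and prediction_key are assumed to come from the
    project's settings page; they are not hard-coded here.
    """
    request = urllib.request.Request(
        prediction_url,
        data=frame_jpeg,
        headers={
            "Prediction-Key": prediction_key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def max_probabilities(response: dict) -> dict:
    """Collapse the predictions list into {tag name: highest probability}."""
    best = {}
    for prediction in response.get("predictions", []):
        tag = prediction["tagName"]
        best[tag] = max(best.get(tag, 0.0), prediction["probability"])
    return best
```

Keeping only the highest probability per tag gives the rest of the pipeline one number each for the ball, the hoop, and the player, even when the model reports several detections of the same object.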

The output looks like this:

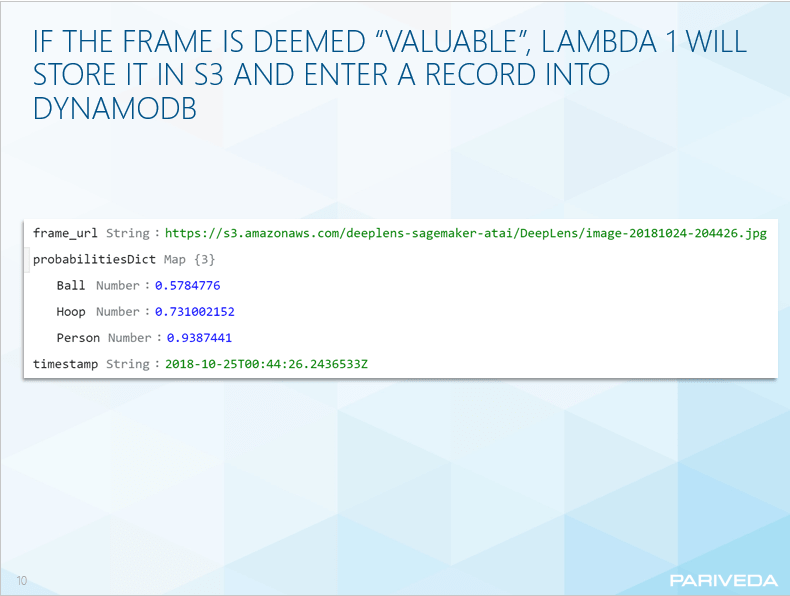

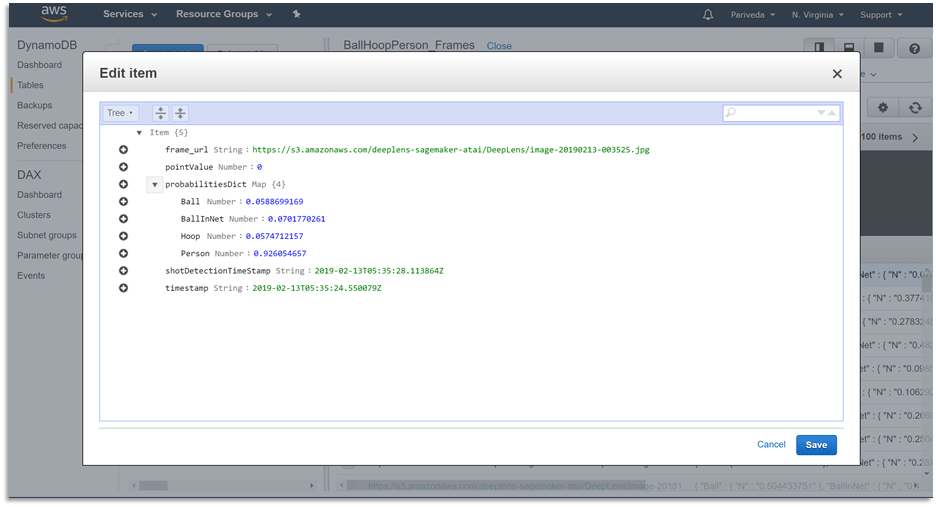

Next, if the probability of a hoop and a basketball being in the image is above 40 percent, we store the frame in an S3 bucket and insert a new record into DynamoDB (if you don't have an S3 bucket yet, feel free to create one now). The DynamoDB record contains attributes about the frame; yours can be different. Ours had the frame URL, a probabilities dictionary (probability of a ball, hoop, and player), and a timestamp.

Back in the Lambda function, if there is a probability of 40 percent or more that a player is in the frame, we store the frame in S3 regardless of the hoop and basketball probabilities. Feel free to change the thresholds as needed for your product and model performance. We did this to keep the system as modular as possible, so we could later use this output to detect player improvements and eventually provide team-level feedback.
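The storage rule above can be sketched as follows. This is an illustration under stated assumptions rather than our production code: the tag names (`basketball`, `hoop`, `player`), the attribute names in the record, and the helper names are all hypothetical, and both checks use "40 percent or more" for simplicity. `s3_client` is assumed to be a boto3 S3 client and `table` a boto3 DynamoDB `Table` resource; they are passed in so the logic can be tried without AWS access.

```python
from datetime import datetime, timezone
from decimal import Decimal

THRESHOLD = 0.40  # 40 percent, as in the text; tune for your own model


def has_ball_and_hoop(probs: dict, threshold: float = THRESHOLD) -> bool:
    """True when both the basketball and the hoop clear the threshold."""
    return (probs.get("basketball", 0.0) >= threshold
            and probs.get("hoop", 0.0) >= threshold)


def has_player(probs: dict, threshold: float = THRESHOLD) -> bool:
    """True when a player clears the threshold."""
    return probs.get("player", 0.0) >= threshold


def build_record(frame_url: str, probs: dict, now: datetime = None) -> dict:
    """Build the DynamoDB item: frame URL, probabilities, timestamp."""
    now = now or datetime.now(timezone.utc)
    return {
        "frame_url": frame_url,  # hypothetical attribute name
        # boto3's DynamoDB resource rejects floats, so convert to Decimal.
        "probabilities": {tag: Decimal(str(p)) for tag, p in probs.items()},
        "timestamp": now.isoformat(),
    }


def store_frame(frame_jpeg: bytes, probs: dict, s3_client, table,
                bucket: str, key: str) -> bool:
    """Upload the frame when either rule matches; record ball+hoop frames."""
    ball_and_hoop = has_ball_and_hoop(probs)
    if not (ball_and_hoop or has_player(probs)):
        return False
    s3_client.put_object(Bucket=bucket, Key=key, Body=frame_jpeg)
    if ball_and_hoop:
        table.put_item(Item=build_record(f"s3://{bucket}/{key}", probs))
    return True
```

Keeping the two checks as separate functions mirrors the modular intent: the player rule can evolve toward player-level analytics without touching the shot pipeline.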

Shot detection

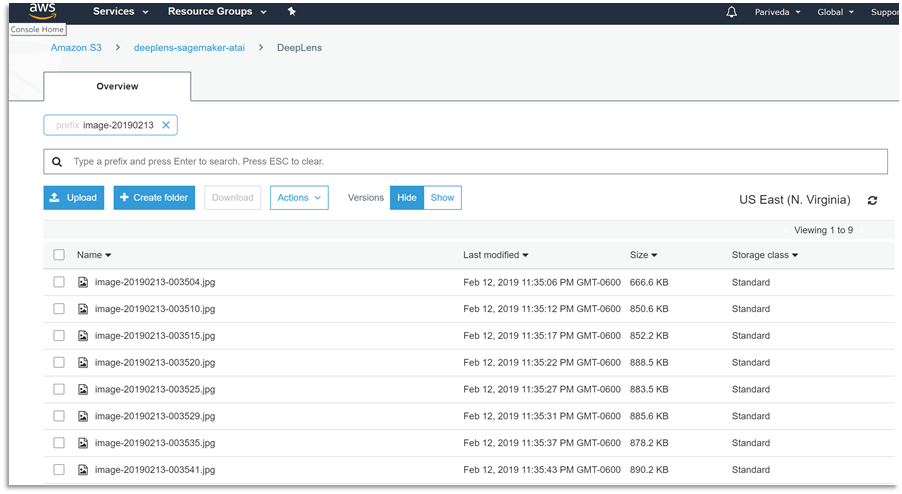

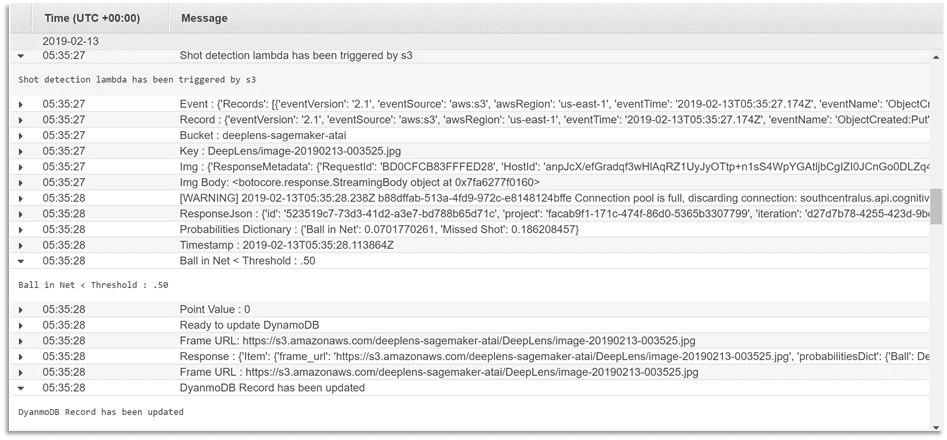

Next, we had a separate Lambda function that is triggered automatically by the S3 upload that just occurred. Below is what your S3 bucket will look like once it has images in it.

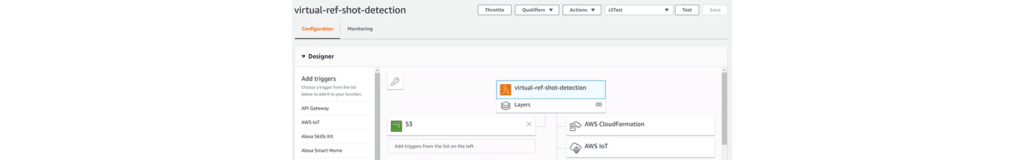

To create a trigger for the second lambda (shot detection), we just needed to drag the S3 module to the left and fill out the necessary configurations.

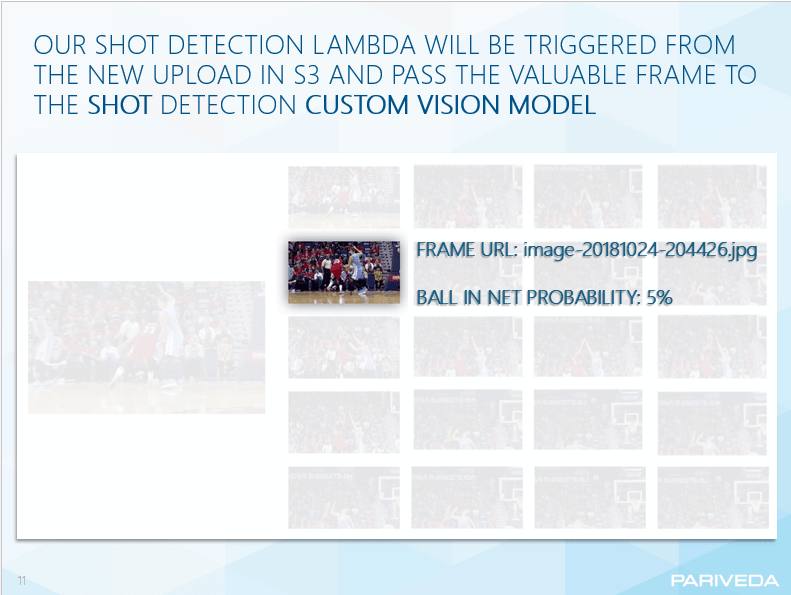

Next, we passed the frame that triggered the Lambda function into our second Custom Vision model to predict a successful shot. Again, we split these steps up to allow for future player-level or team-level analytics features.
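An S3-triggered Lambda function receives an event describing the uploaded object. The following is a minimal sketch of reading that event, assuming the standard S3 notification layout (`Records[].s3.bucket.name` and `Records[].s3.object.key`). The helper name `uploaded_objects` is hypothetical, and the call to the second model is left as a comment because it depends on your own project.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event: dict) -> list:
    """Return (bucket, key) pairs for every object in an S3 event."""
    pairs = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events, so decode them.
        key = unquote_plus(s3_info["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def lambda_handler(event, context):
    """Entry point for the shot detection Lambda function."""
    frames = uploaded_objects(event)
    for bucket, key in frames:
        print(f"New frame uploaded: s3://{bucket}/{key}")
        # Here the frame would be downloaded and sent to the
        # shot detection model (project-specific, not shown).
    return {"processed": len(frames)}
```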

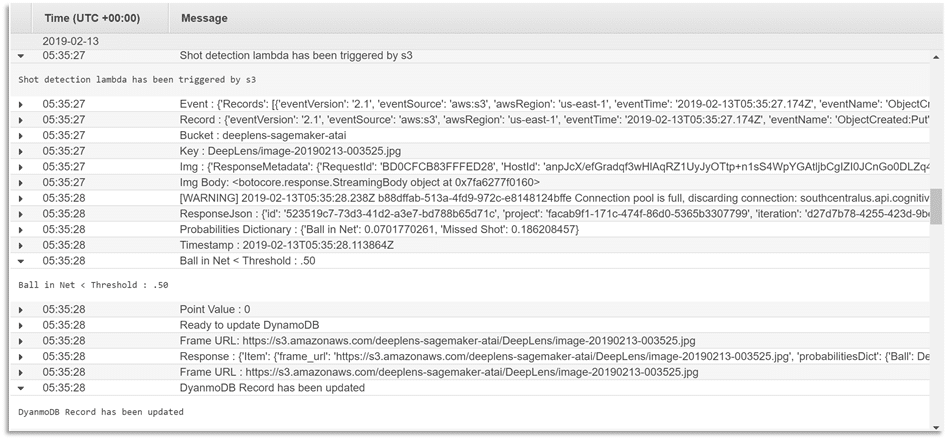

This is what the output of the shot detection model looks like in our logs:

If the probability that the frame had a successful shot is more than or equal to 50 percent, update the DynamoDB record to include a point value of 1. If it’s less than 50 percent, update the record with a point value of 0.
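The scoring rule can be sketched in a few lines. The key attribute `frame_url`, the attribute `point_value`, and the helper names are hypothetical and stand in for whatever your table actually uses. The returned dictionary is shaped so it could be passed to a boto3 DynamoDB `Table.update_item` call.

```python
def point_value(shot_probability: float, threshold: float = 0.5) -> int:
    """Return 1 for a probable made shot (>= threshold), otherwise 0."""
    return 1 if shot_probability >= threshold else 0


def build_point_update(frame_url: str, shot_probability: float) -> dict:
    """Build keyword arguments for updating the frame's record."""
    return {
        "Key": {"frame_url": frame_url},  # hypothetical key attribute
        "UpdateExpression": "SET #pts = :pts",
        "ExpressionAttributeNames": {"#pts": "point_value"},
        "ExpressionAttributeValues": {":pts": point_value(shot_probability)},
    }
```

With a boto3 table resource in hand, the update would then be `table.update_item(**build_point_update(frame_url, probability))`.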

Here is the final DynamoDB output:

Conclusion

Overall, the DeepLens was an easy tool to get started with and allowed us to prove our concept. Since the DeepLens has a low frame rate relative to other video processing tools, however, it can easily miss critical moments. For Team Finesse, an internal initiative creating small workable POCs with emerging technologies, it was a challenge to capture the continuous set of frames tracking a ball’s progression from the player through the hoop. As the next step, the team is interested in replacing the DeepLens video feed with that from an iPhone.
